Generative AI can produce new content, such as text, images, audio or code, from a prompt written by the user. ChatGPT is the best-known example.

Important concepts of generative AI

Generative Adversarial Networks (GAN)

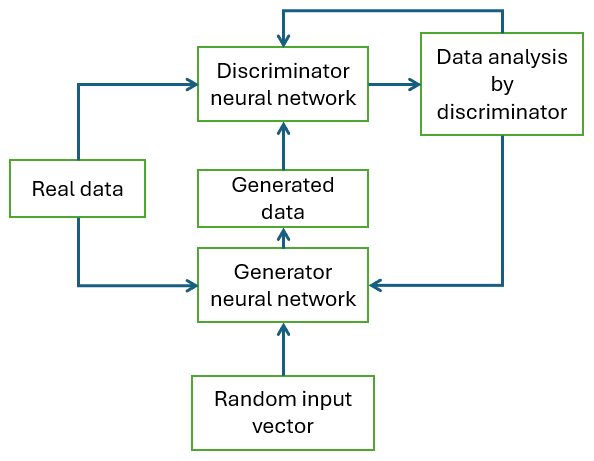

A GAN consists of two deep neural networks trained on a large volume of data, often gathered from many sources, from which they learn patterns, relations and structures. The generator network takes random noise as input and produces new data that imitates the original data. The discriminator network receives both real training data and the generator’s output, and tries to tell whether each sample is real or generated.

After each round, both networks are told whether the discriminator classified the data correctly. The generator then adjusts itself to produce content that the discriminator will classify as real. At the same time, the discriminator learns from its classification mistakes and gets better at telling real data from generated data. Ideally, training ends when the discriminator can no longer distinguish real data from data produced by the generator, that is, when its guesses are right only about half the time.
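The adversarial loop described above can be illustrated with a deliberately tiny, one-dimensional GAN. This is a sketch under strong simplifying assumptions, not how real GANs are built. The “real” data is assumed to be numbers drawn from a normal distribution with mean 2. The generator is the hypothetical function G(z) = z + b, so it only learns a shift `b` applied to random noise `z`. The discriminator is a single logistic unit D(x) = sigmoid(w·x + c) instead of a deep network. The names `train_toy_gan`, `b`, `w` and `c` are illustrative. The discriminator minimizes -[log D(real) + log(1 - D(fake))], and the generator minimizes -log D(G(z)), a common choice of generator loss. Gradients are written out by hand.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(real_mean=2.0, steps=2000, d_steps=5, batch=32,
                  lr_d=0.1, lr_g=0.01, seed=0):
    """Toy 1-D GAN (hypothetical): real data ~ N(real_mean, 1), G(z) = z + b."""
    rng = random.Random(seed)
    b = -2.0          # generator parameter, starts far from the real data
    w, c = 0.0, 0.0   # discriminator D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        # Discriminator: a few gradient steps on -[log D(real) + log(1 - D(fake))]
        for _ in range(d_steps):
            gw = gc = 0.0
            for _ in range(batch):
                x_real = rng.gauss(real_mean, 1.0)
                x_fake = rng.gauss(0.0, 1.0) + b       # generator output
                p_real = sigmoid(w * x_real + c)
                p_fake = sigmoid(w * x_fake + c)
                gw += -(1.0 - p_real) * x_real + p_fake * x_fake
                gc += -(1.0 - p_real) + p_fake
            w -= lr_d * gw / batch
            c -= lr_d * gc / batch

        # Generator: one gradient step on -log D(G(z)), i.e. move the
        # generated samples toward what the discriminator calls "real".
        gb = 0.0
        for _ in range(batch):
            p_fake = sigmoid(w * (rng.gauss(0.0, 1.0) + b) + c)
            gb += -(1.0 - p_fake) * w
        b -= lr_g * gb / batch

    return b, w, c


b, w, c = train_toy_gan()
print(f"generator shift b = {b:.2f} (real mean is 2.0)")
print(f"D(2.0) = {sigmoid(w * 2.0 + c):.2f} (0.5 means D cannot tell)")
```

If training works, `b` moves from its starting value of -2 toward 2, and D(2.0) approaches 0.5, meaning the discriminator can no longer tell the two distributions apart. Real GANs replace both functions with deep networks and use a framework’s automatic differentiation instead of hand-written gradients.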

Transformer

The Transformer is a neural network architecture for Natural Language Processing tasks, introduced in 2017 in the paper “Attention Is All You Need”. It consists of an encoder and a decoder. First, the input sentence is split into tokens, which are words or pieces of words. Each token is mapped to a vector of numbers, called an embedding, that represents its meaning, and information about the token’s position in the sentence is added to it. The encoder processes these vectors with an attention mechanism, which calculates weights indicating how much each token should take every other token into account, so that each vector also captures the token’s context. The decoder receives the encoder’s output and, using attention weights of its own, produces the output sequence one token at a time.
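The steps above (tokens, embeddings, positions, attention weights) can be sketched in plain Python. This is a simplified sketch built on several assumptions. The tokenizer simply splits on whitespace. The embedding table is filled with random numbers instead of learned values. The attention uses the input vectors directly as queries, keys and values (Q = K = V = X), skipping the learned projection matrices, the multiple attention heads and the decoder of a real Transformer. The sinusoidal positional encoding and the scaled dot-product formula softmax(QKᵀ/√d_k)V follow the paper. The function names are hypothetical.

```python
import math
import random


def positional_encoding(pos: int, d_model: int) -> list[float]:
    """Sinusoidal encoding: sin on even indices, cos on odd indices."""
    return [
        math.sin(pos / 10000 ** (i / d_model)) if i % 2 == 0
        else math.cos(pos / 10000 ** ((i - 1) / d_model))
        for i in range(d_model)
    ]


def embed(sentence: str, d_model: int = 8, seed: int = 0):
    """Split into tokens and turn each one into embedding + position vector."""
    tokens = sentence.lower().split()          # toy tokenizer: one token per word
    rng = random.Random(seed)
    table: dict[str, list[float]] = {}         # random stand-in for learned embeddings
    vectors = []
    for pos, tok in enumerate(tokens):
        if tok not in table:
            table[tok] = [rng.uniform(-1.0, 1.0) for _ in range(d_model)]
        pe = positional_encoding(pos, d_model)
        vectors.append([e + p for e, p in zip(table[tok], pe)])
    return tokens, vectors


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)                            # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X: list[list[float]]):
    """Scaled dot-product attention with Q = K = V = X (no learned weights)."""
    d_k = len(X[0])
    outputs, weights = [], []
    for q in X:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in X]
        w = softmax(scores)                    # how much this token attends to each token
        weights.append(w)
        # Output = weighted average of the value vectors.
        outputs.append([sum(w[j] * X[j][i] for j in range(len(X)))
                        for i in range(d_k)])
    return outputs, weights


tokens, X = embed("the cat sat on the mat")
out, weights = self_attention(X)
for tok, row in zip(tokens, weights):
    print(f"{tok:>4}", [round(p, 2) for p in row])
```

Each row of `weights` sums to 1 and says how much one token attends to every token in the sentence. The two occurrences of “the” share an embedding but sit at different positions, so their input vectors differ.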

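Besides translation, the task it was originally designed for, the Transformer is also used for text generation and summarization. In practice, transformers stack several encoder and decoder layers (the original model used six of each) to produce better-quality results.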

Diffusion models

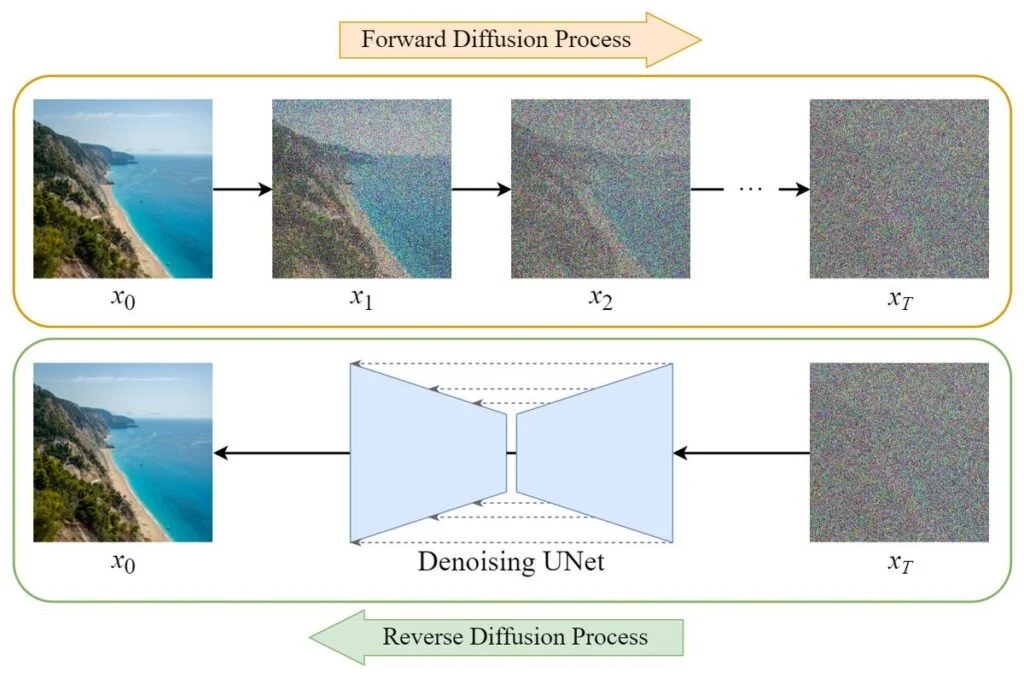

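Diffusion models are used in Stable Diffusion, a model that generates images from text. They work in two stages. In the forward diffusion process, random noise is added to a training image step by step until only noise remains. In the reverse diffusion process, a neural network learns to remove that noise one step at a time, so that it can start from pure noise and gradually produce a new image. In text-to-image models, the text prompt guides this denoising.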
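The forward process, and the reason the reverse process can work, can be sketched on a toy “image” of four pixel values. This sketch rests on several assumptions. It uses a linear noise schedule with 1,000 steps, with β growing from 0.0001 to 0.02 (values used in the DDPM paper). It applies the closed-form shortcut x_t = √ᾱ_t·x_0 + √(1 - ᾱ_t)·ε. In place of a trained denoising network, the true noise ε stands in for the network’s prediction. Stable Diffusion itself works on compressed latent representations and conditions the denoising on the text prompt, which this sketch does not show. The function names are hypothetical.

```python
import math
import random

# Linear noise schedule (assumed): beta_t grows from 1e-4 to 0.02 over T steps.
T = 1000
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bars[t] = product of (1 - beta_s) for s <= t: the fraction of the
# original signal's variance still present at step t.
alpha_bars = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bars.append(running)


def forward_diffuse(x0, t, rng):
    """Jump straight to step t of the forward process: add Gaussian noise to x0."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return xt, noise


def estimate_x0(xt, predicted_noise, t):
    """Undo the noising, given a prediction of the noise that was added."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * n) / math.sqrt(a)
            for x, n in zip(xt, predicted_noise)]


rng = random.Random(0)
x0 = [0.9, -0.3, 0.5, 0.0]          # toy "image": four pixel values
xt, noise = forward_diffuse(x0, T - 1, rng)
print("signal left at last step:", math.sqrt(alpha_bars[-1]))
print("noisy pixels:", [round(v, 2) for v in xt])
# Stand-in for a trained network: use the true noise as the "prediction".
x0_hat = estimate_x0(xt, noise, T - 1)
print("recovered:", [round(v, 2) for v in x0_hat])
```

With a perfect noise prediction, the original pixels are recovered even from step 999, where almost no signal is left. A real model only approximates ε, so generation removes noise gradually over many reverse steps instead of in one shot.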

Generative AI limitations

Generative AI can translate text and produce coherent text, audio, video and source code. Training it requires not only a large quantity of data but also a lot of computing power. However, it can produce hallucinations: outputs that sound plausible but contain incorrect information, often caused by incorrect, ambiguous or incomplete data, whether in the training data or in the user’s input.

More details about these and other generative AI concepts will be explained in future posts.